Provocations

What if you could chat with personas?

Exploring how generative AI could superpower research outputs to foster greater empathy and engagement

With the release of GPT-4 and the growing interest in publicly available generative AI tools such as DALL-E 2, Midjourney, and more, there is no dearth of people writing about and commenting on the potential positive and negative impacts of AI, and how it might change work in general and design work specifically. As we sought to familiarize ourselves with many of these tools and technologies, we immediately recognized the potential risks and dangers, but also the prospects for generative AI to augment how we do research and communicate findings and insights.

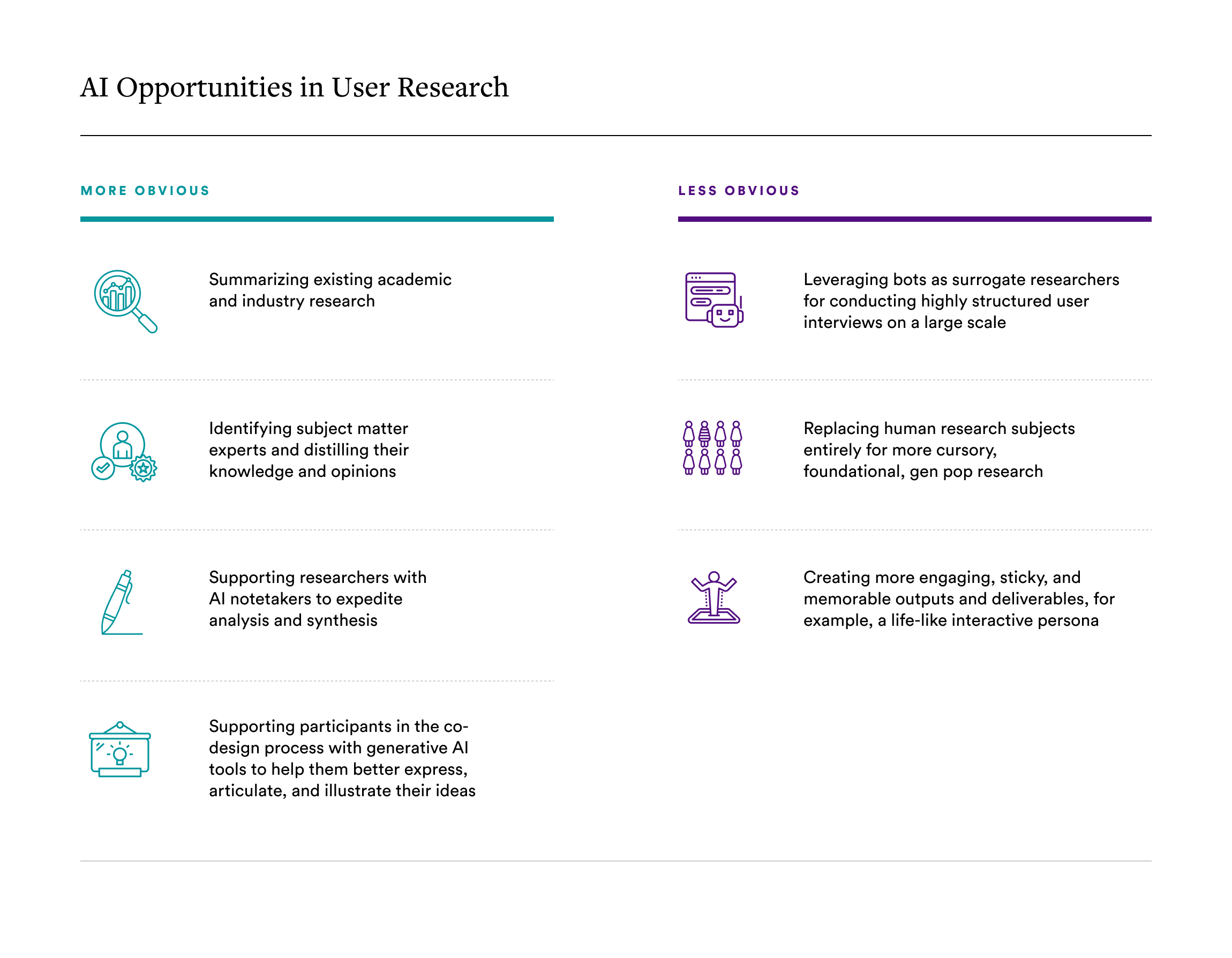

Looking at some of the typical methods and deliverables of the human-centered design process, we not only saw practical opportunities for AI to support our work in the nearer term, but also some more experimental, less obvious (and, in some cases, potentially problematic) opportunities further out in the future.

Now, while each of the above use cases merits its own deep dive, in this article we want to focus on how advances in AI could potentially transform one common, well-established output of HCD research: the persona.

Breathing new life into an old standard

A persona is a fictional, yet realistic, description of a typical or target user of a product. It’s an archetype based on a synthesis of research with real humans that summarizes and describes their needs, concerns, goals, behaviors, and other relevant background information.

Personas are meant to foster empathy for the users for whom we design and develop products and services. They are meant to help designers, developers, planners, strategists, copywriters, marketers, and other stakeholders build greater understanding and make better decisions grounded in research.

But personas tend to be flat, static, and reductive—often taking the form of posters or slide decks and highly susceptible to getting lost and forgotten on shelves, hard drives, or in the cloud. Is that the best we can do? Why aren’t these very common research outputs of the human-centered design process, well, a little more “alive” and engaging?

Peering into a possible future with “live personas”

Imagine a persona “bot” that not only conveys critical information about user goals, needs, behaviors, and demographics, but also has an image, likeness, voice, and personality. What if those persona posters on the wall could talk? What if all the various members and stakeholders of product, service, and solution teams could interact with these archetypal users, and in doing so, deepen their understanding of and empathy for them and their needs?

In that spirit, we decided to use currently available, off-the-shelf, mostly or completely free AI tools to see if we could re-imagine the persona into something more personal, dynamic, and interactive—or, what we’ll call for now, a “live persona.” What follows is the output of our experiments.

As you’ll see in the video below, we created two high school student personas, abstracted and generalized from research conducted in the postsecondary education space. One is more confident and proactive; the other more anxious and passive.

Now, without further ado, meet María and Malik:

Looking a bit closer under the hood

Each of our live personas began as, essentially, a chatbot. We looked at tools like Character.ai and Inworld, and ultimately built María and Malik in the latter. Inworld is intended to be a development platform for game characters, but many of its ideas and capabilities are intriguing in the context of personas, such as adjustable personality and mood attributes, personal and common knowledge sets, goals and actions, and scenes. While we did not explore all of those features, we did create two high school student personas representing a couple of “extremes” with regard to thinking about and planning their postsecondary future: a more passive and uncertain María and a more proactive and confident Malik.
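To make the idea of adjustable attributes a bit more concrete, here is a minimal sketch of how a persona definition might be represented as plain data. This is not Inworld's API or data model: the `PersonaDefinition` class, its field names, the 0.0 to 1.0 trait scale, and the trait values for María and Malik are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PersonaDefinition:
    """Hypothetical persona record; field names are illustrative only."""
    name: str
    description: str
    traits: dict[str, float]                        # assumed 0.0 to 1.0 "sliders"
    goals: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject trait values outside the assumed slider range.
        for trait, value in self.traits.items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{trait} must be between 0.0 and 1.0")


def contrast(a: PersonaDefinition, b: PersonaDefinition) -> dict[str, float]:
    """Return b's trait value minus a's for every trait both personas share."""
    shared = a.traits.keys() & b.traits.keys()
    return {t: round(b.traits[t] - a.traits[t], 2) for t in sorted(shared)}


# Placeholder values, not taken from our research data.
maria = PersonaDefinition(
    name="María",
    description="More passive and uncertain about planning her future",
    traits={"confidence": 0.2, "proactivity": 0.3},
)
malik = PersonaDefinition(
    name="Malik",
    description="More proactive and confident about planning his future",
    traits={"confidence": 0.8, "proactivity": 0.9},
)

print(contrast(maria, malik))
```

Keeping the definition as data rather than free text would make it easier to review each persona against the underlying research before anyone chats with it.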

Here’s a peek at how we created Malik from scratch:

Interacting with María and Malik, it was immediately evident how these two archetypes were similar and different. But they still felt a tad cartoonish and robotic. So, we took some steps to improve progressively on their appearance, voices, and expressiveness.

Here’s a peek at how we made María progressively more realistic by combining several different generative AI and other tools:

Eyeing the future cautiously

The gaming industry is already leading in the development of AI-powered characters, so it certainly seems logical to consider applying many of those precedents, principles, tools, and techniques to aspects of our own work in the broader design of solutions, experiences, and services. Our experimentation with several generative AI tools available today shows that it is indeed possible to create relatively lifelike and engaging interactive personas—though perhaps not entirely efficiently (yet). And, in fact, we might be able to do more than just create individual personas to chat with; we could create scenes or even metaverse environments containing multiple live personas that interact with each other and then observe how those interactions play out. In this scenario, our research might inform the design of a specific service or experience (e.g., a patient-provider interaction or a retail experience). Building AI-powered personas and running “simulations” with them could potentially help design teams prototype a new or enhanced experience.

But, while it’s fun and easy to imagine more animated, captivating research and design outputs utilizing generative AI, it’s important to pause and appreciate the numerous inherent risks and potential unintended consequences of AI—practical, ethical, and otherwise. Here are just a few that come to mind:

- Algorithmically-generated outputs could perpetuate biases and stereotypes because AIs are only as good as the data they are trained on.

- AIs are known to hallucinate: they may respond overconfidently in ways that are not justified by their training data or, in our case, by the definitions, descriptions, and parameters we configured for an AI-powered persona. Those hallucinations, in turn, could lead someone to make a product development decision that unintentionally causes harm or a disservice.

- AIs could be designed to continuously learn and evolve over time, taking in all previous conversations and potentially steering users towards the answers the AI predicts they want rather than reflecting the data it was originally trained on. This would negate the purpose of the outputs and could result in poor product development decisions.

- People could develop a deep sense of connection and emotional attachment to AIs that look, sound, and feel humanlike—in fact, they already have. It’s an important first principle that AIs be transparent and proactively communicate that they are AIs, but when the underlying models become more and more truthful and they are embodied in more realistic and charismatic ways, then it becomes more probable that users might develop trust and affinity towards them. Imagine how much more potentially serious a hallucination becomes, even if a bot states upfront that it is fictitious and powered by AI!

Finally, do we even really want design personas that have so much to say?! Leveraging generative AI in any of these ways, without thoughtful deliberation, could ultimately lead us to over-index on attraction and engagement with the artifact at the expense of its primary purpose. Even if we could “train” live personas to accurately reflect the core ideas and insights that are germane to designing user-centered products and services, would giving them the gift of gab just end up muddling the message?

In short, anyone designing live personas would have to consider these consequences very carefully. Guardrails might be needed, such as limiting the types of questions and requests that a user may put to the persona, making the persona “stateless” so it can’t remember previous conversations, capping the amount of time users can interact with the persona, and having the persona remind the user at various points during a conversation that it is fictitious. Ultimately, personas must remain true to their original intent and accurately represent the research insights and data from which they were built.
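As a rough illustration of the guardrails listed above, here is a minimal sketch of a wrapper that could sit between a user and any persona chatbot. Everything in it is an assumption for illustration: the `GuardedPersona` class, the keyword-based topic check, the default limits, and the `respond` callable that stands in for whatever chatbot backend is actually used. A real topic filter would need to be far more robust than substring matching.

```python
import time
from typing import Callable, Iterable

DISCLOSURE = "Reminder: I am a fictional, AI-powered persona, not a real person."
OFF_TOPIC = "That question is outside what this persona can speak to."
TIME_UP = "This session has reached its time limit."


class GuardedPersona:
    """Hypothetical guardrail wrapper; not tied to any specific chatbot tool."""

    def __init__(
        self,
        respond: Callable[[str], str],   # stand-in for the chatbot backend
        allowed_topics: Iterable[str],   # naive keyword allow-list
        max_seconds: float = 600.0,      # cap on total session time
        remind_every: int = 3,           # disclose every N answered turns
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._respond = respond
        self._topics = [t.lower() for t in allowed_topics]
        self._max_seconds = max_seconds
        self._remind_every = remind_every
        self._clock = clock
        self._started = clock()
        self._turns = 0

    def ask(self, question: str) -> str:
        # Guardrail: cap the amount of time a user can interact.
        if self._clock() - self._started > self._max_seconds:
            return TIME_UP
        # Guardrail: limit the types of questions that may be asked.
        if not any(topic in question.lower() for topic in self._topics):
            return OFF_TOPIC
        self._turns += 1
        # Guardrail: stateless. Only the current question is passed along,
        # and no conversation history is stored on this object.
        answer = self._respond(question)
        # Guardrail: periodically remind the user the persona is fictitious.
        if self._turns % self._remind_every == 0:
            answer = f"{answer}\n{DISCLOSURE}"
        return answer


# Usage with a trivial stand-in backend that just echoes the question.
persona = GuardedPersona(lambda q: f"(persona answer to: {q})", ["college", "career"])
print(persona.ask("What worries you most about college?"))
print(persona.ask("What is your favorite movie?"))
```

The `clock` parameter exists only so the time cap can be exercised without waiting; in normal use the default monotonic clock would do.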

And further, even if applying generative AI technologies in these ways becomes sufficiently accessible and cost-effective, it will still behoove us to remember that they are only tools that we might use as part of our greater research and design processes, and that we should neither be over-swayed by nor base major decisions on something a bot says, as charming as it might be.

Though it’s still early days, what do you think about the original premise? Could AI-enabled research outputs that are more interactive and engaging actually foster greater empathy and understanding of target end-users, and could that lead to better strategy, design, development, and implementation decisions? Or will the effort required and the possible risks of AI-enabled research outputs outweigh their possible benefits?
