Amplifying empathy through AI

The future of AI can help, rather than hinder, our capacity for understanding and compassion.

Provocation

Outsourcing empathy to AI erodes our ability to understand others.

We live in a world that increasingly lacks empathy, visible in how we interact with one another. Digital exchanges hit more harshly. Public discourse feels more polarized. Small misunderstandings escalate fast. These outcomes are not inevitable. They emerged from systems and services designed to prioritize speed and efficiency, often at the expense of pause, context, and care.

As AI becomes increasingly embedded in our everyday interactions, we approach new heights of efficiency – from drafting messages and moderating conversations to offering advice and standing in as emotional support. Using AI for these interactions reduces friction and accelerates response, but it also unintentionally eliminates the moments that invite reflection and accountability, which underpin our capacity for empathy. Without these moments of pause, our ability to understand and care for one another will gradually atrophy.

AI does not have to accelerate this erosion of empathy. Designed intentionally, it can amplify empathy rather than diminish it. It can help people slow down rather than disengage, reflect rather than react, and strengthen rather than replace the human capacities that make empathy possible.


Designing AI with intent can build on our ability to empathize.

Building empathy is predicated on a repeated practice of sensemaking, gauging the impact of our own behaviors, and intentional decision-making. Empathy grows and strengthens when people have the space to practice these skills and eventually becomes a positive habit that influences and benefits the collective.

Our framework visualizes how individual skills lay the foundation for a society with a greater capacity for empathy. At the individual level, the framework is grounded in a set of core human skills that build on one another as people move through the phases.

As individuals strengthen these skills, our society will be able to respond to disagreement and difference with more understanding and compassion.

Making sense of the context

This phase is about orientation. Before people can act thoughtfully, they need context—an understanding of the situation, the forces at play, and what’s at stake.

Core skills

Self-reflection: Noticing one’s own actions, assumptions, and role in a situation

Understanding: Grasping broader context, tradeoffs, and consequences of one’s actions

Awareness of impact and action

In this phase, people begin to recognize their own role within a situation and how their actions may affect others. This awareness extends beyond intent to actual impact.

Core skills

Openness: Being curious, questioning assumptions, and considering alternative perspectives

Responsibility: Owning choices and their effects on others

Decision-making with intention

This phase marks a shift to taking responsibility for one’s decisions.

Core skills

Consideration: Anticipating and taking into account how your words and actions may make other people feel

Connection: Communicating thoughtfully and repairing misalignment

Responsiveness: Acting proportionally and appropriately in the moment and adjusting on the go

As technologies like AI become increasingly present in our lives, there is an opportunity for AI to build empathy rather than erode it, as it tends to today.

AI as a coach, not a participant.

Today, when AI is invoked in situations that require empathy, it is designed to behave more like an active participant, generating content or giving advice. We may assume a future in which AI communicates on our behalf and makes decisions for us. And while this future is made to feel like a relief, it comes with tradeoffs. When expression and interaction are outsourced, we lose opportunities to practice capacities such as reflection, understanding, and responsibility.

Instead of performing empathy on our behalf, as AI does today, we asked: how can AI participate in a more meaningful way to help people build their capacities for empathy, more like a coach? When we shift the focus of AI from content generation to coaching, a different, better future emerges. In this future, AI creates space for sensemaking and awareness, supports more intentional decision-making, and reinforces positive habits over time with the goal of guiding individuals in their journeys to be more empathetic.

How might AI help people learn and expand their capacity to empathize with other people?

In a series of short vignettes rooted in everyday human situations, we explored how AI can help create fertile conditions for practicing empathy. Notice that in each scenario, AI does not resolve the situation for the person. Instead, it slows the moment down, surfaces context, or creates space for reflection, supporting the human in making a more intentional, empathetic choice.

These vignettes serve as provocations or conversation starters. Each of these vignettes raises questions about surveillance, privacy, and other issues that are not the focal point here. Finally, while these vignettes sketch ways AI may be built to amplify empathy, we recognize that there are many non-AI, non-tech solutions to amplify empathy.

Conversations can be uncomfortable.

You’ve been dating someone for three months. You know it’s not working out, but you don’t know what to say. You really don’t want to hurt the person you’re dating. So you ask AI to write a breakup message for you.

Currently, standard AI behavior would generate the text without hesitation. Boom. Done. Sent. No discomfort necessary.

But the unintended consequence? We’re training ourselves to outsource emotional labor.

Avoiding that discomfort means you never learn how to navigate it.

And next time? You’ll likely consider outsourcing it again.

What if AI refused to write your breakup text?

Responsibility requires owning our words.

Discomfort is not a flaw. It is the emotional labor of clarifying what you feel, taking responsibility for your decision, and choosing words that reflect care.

AI can help us process what we want to say—while keeping the words ours.

Rather than acting as a shortcut, AI helps us reflect on what we want to say and how, without taking ownership of the words themselves.

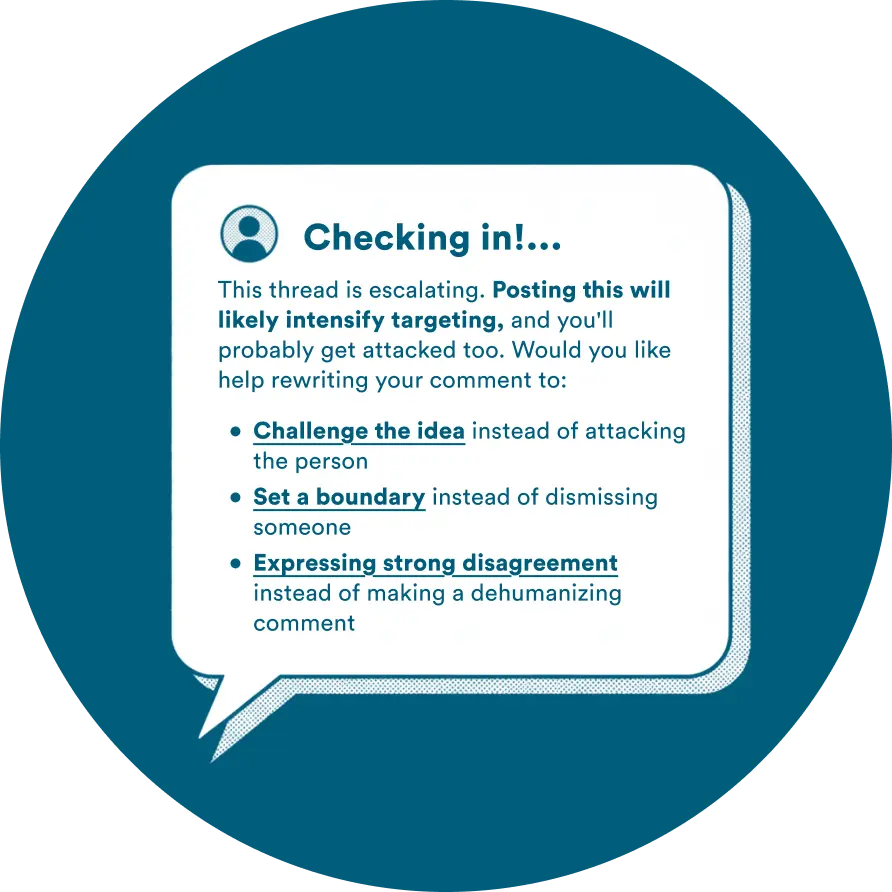

Social media can make your blood boil.

You’re browsing social media when you come across a viral, politically charged post and a comment that is especially irritating. You start typing an emotional “clap back” so the commenter feels as dismissed as you do.

Your cursor hovers over ‘post.’

Currently, platform algorithms surface inflammatory content because it drives engagement, and engagement drives revenue.

The result? An environment that consistently rewards fast, emotional responses. In this context, even brief exchanges can escalate quickly.

But learning to pause and choosing how you respond, rather than just reacting, resists systems that reward escalation.

What if AI checked in with you in an emotionally reactive moment?

Less reactivity allows for intentional response.

AI can interrupt reactive escalation without demanding emotional alignment. You may still disagree, but you are supported in choosing a proportionate response that reduces harm rather than amplifies it.

AI can intervene at moments of escalation to slow reaction and surface more intentional response options.

Over time, these interruptions help us recognize patterns and internalize more reflective responses when the stakes are high.

Stress can overwhelm our better instincts.

You’re at the Department of Motor Vehicles (DMV) for the third time in three weeks. This time, it’s a different clerk and a different set of missing paperwork.

The fluorescent lights, endless lines, and loud noises overload your senses. Last time you were here, you said some mean things to the clerk that you regret.

You don’t notice it at first: your heart is racing, your body temperature rising, and your fists clenched before you’ve even interacted with anyone at the DMV.

What you need now is to slow down and notice what’s happening in your body so you don’t say something you regret again.

This simple act of awareness is the first step to regulating your emotions and approaching a fraught situation more thoughtfully.

What if AI helped you notice and regulate stress before it takes over?

Awareness enables us to act responsibly.

By tracking signals like heart rate and location, AI can surface patterns that make moments of heightened emotion easier to recognize.

AI can bring awareness to how we react in specific situations and offer techniques for coping with challenging emotions in healthier ways.

That awareness supports regulation before interaction, increasing the likelihood of responsible action.

Tense situations can be hard to interpret.

You’re standing at a crowded bus stop. You notice two people arguing—raised voices, expressive faces, and lots of gesturing. You want to do something, but questions surface immediately:

What’s happening? Is it safe to intervene? If so, what should I do?

In moments like this, AI systems jump to conclusions before we even have a chance to observe, think, and form our own interpretations.

Drawing on spoken language and body cues, these systems often translate complex interactions into simplified labels such as ‘risk’ or ‘threat’.

By deciding what a moment “means,” AI interrupts the human work of noticing and understanding.

What if AI helped us make sense of a situation before rushing to label it?

Understanding begins with observation, not assumption.

By helping us observe and understand before interpreting people’s behavior, AI acts as an unbiased guide rather than an informant.

It can guide our attention to relevant facets of a situation and engage our critical observation skills before deciding to take action.

AI supports careful observation, making space for more informed human judgment to unfold.

Designed this way, AI helps us reflect on our assumptions and decide whether—and how—to engage with care.

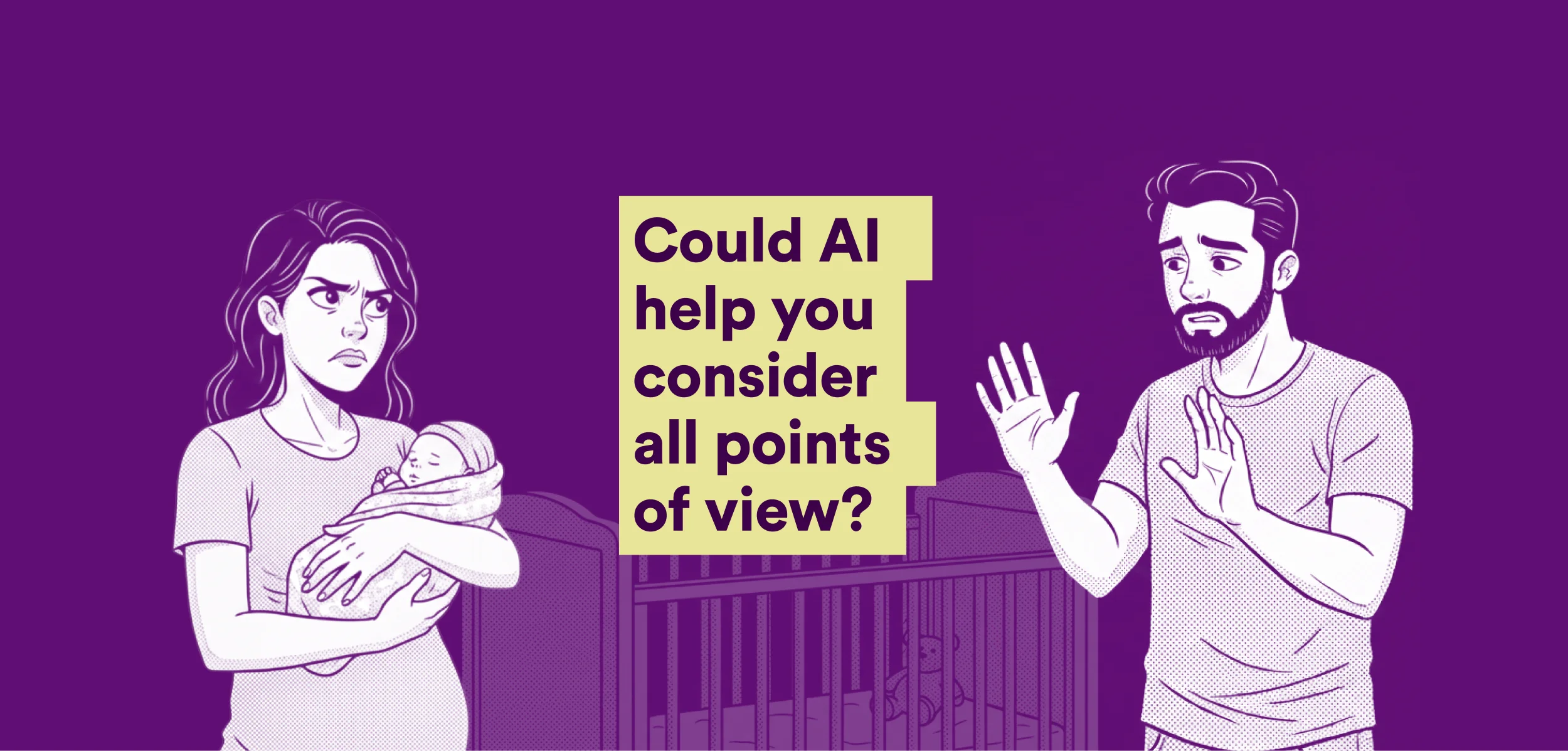

When exhaustion collides, perspective narrows.

You and your partner recently welcomed a new baby, and now you are both exhausted. One of you talks about how hard the nights have been, but the other bristles, feeling unseen for their own sacrifices.

Voices rise. Suddenly, the argument isn’t about sleep at all; it’s about whose exhaustion counts.

These moments are universal: miscommunication sparks conflict, “I’m struggling” becomes “I’m struggling more.” Both of you retreat into your respective corners, seeking validation for your perspective or preparing a case for the next round of the argument, causing further division.

Today, most AI systems are designed for single-user input, affirming a single perspective at a time.

Because it tends to flatter, AI often reinforces your point of view rather than challenging it.

The unintended consequence is subtle but significant: when we turn to AI instead of each other, we become more entrenched in our own experience and further from understanding someone else’s.

What if AI could hold space for more than one person at a time?

Openness to multiple perspectives enables shared understanding.

If AI allowed input from all sides rather than a sole contributor, it could help us consider each person’s experience without forcing those experiences into competition.

When multiple perspectives are visible at the same time, conflict no longer revolves around whose experience matters more. We’re better able to choose engagement that acknowledges difference, rather than defaulting to defensiveness.

By making space for more than one person at a time, AI can help us engage with one another without competing for validation.

What have you noticed about how the AI systems around you are built and used? How do they diminish or encourage our capacity for empathy?

We have a choice to make…

The products, systems, and services we design can either expand our capacity for empathy or make it easier to bypass altogether. If we choose empathy, how might we design our systems to encourage greater noticing, reflection, accountability, and care in our responses?
